
For this episode of Platypod, I talked to Dr. Tanja Ahlin about her research, work, and academic trajectory. She’s currently a postdoctoral researcher at the University of Amsterdam in the Netherlands, and her work focuses on intersections of medical anthropology, social robots, and artificial intelligence. I told her of my perspective as a grad student, making plans and deciding what routes to take to be successful in my field. Dr. Ahlin was very generous in sharing her stories and experiences, which I’m sure are helpful to other grad students as well. Enjoy this episode, and contact us if you have questions, thoughts, or suggestions for other episodes.

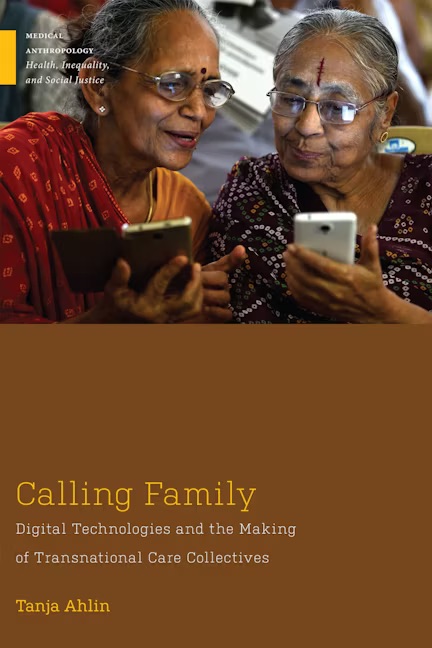

Dr. Tanja Ahlin, image from her personal website.

Dr. Tanja Ahlin is a medical anthropologist and STS scholar with a background in translation. She has translated books about technology and more. She has a master’s degree in medical anthropology, focusing on the topic of health and society in South Asia. Dr. Ahlin has been interested in e-health/telehealth for a long time, since before the recent COVID-19 pandemic years, in which those words became part of our daily vocabulary. Her Ph.D., which she completed at the University of Amsterdam, focused on everyday digital technologies in elder care at a distance. Her Ph.D. research is being published as a book by Rutgers University Press. The book will be available for purchase starting on August 11, 2023.

Calling Family – Digital Technologies and the Making of Transnational Care Collectives | Rutgers University Press

In our conversation, we talked about Dr. Ahlin’s blog focusing on the Anthropology of Data and AI. This project (in which Dr. Ahlin writes about the intersection of tech and different fields such as robotics, policy, ethics, health, and ethnography) is a kind of translation work, since Dr. Ahlin is writing about complex topics for a broader audience that is not familiar with some STS and anthropological concepts and discussions. “The blog posts are not supposed to be very long. I aim for two to four minutes of reading … I realized that people often don’t have time to read more than that, right?” says Dr. Tanja Ahlin.

Dr. Ahlin’s book is based on ten years of ethnographic research with Indian transnational families. These are families whose members live all around the world. Migration is mostly driven by work opportunities abroad. In her research, Dr. Ahlin looked at how these families used all kinds of technologies, like mobile phones and webcams, the Internet, and WhatsApp, not only to keep in touch with each other but also to provide care at a distance. Dr. Ahlin conducted interviews with nurses living all around the world, from the US to Canada to the UK, the Maldives, and Australia. This varied and diverse field gave rise to the concept of field events that Dr. Ahlin develops in her work. She also developed the notion of the transnational care collective to show how care is reconceptualized when it has to be done at a distance.

In sum, this episode of Platypod highlights how anthropologists come from different backgrounds and gives an honest overview of how we get to research our topics and occupy the spaces we do. We do not have linear stories, and that does not determine our potential. We at Platypod are very thankful for Dr. Ahlin’s time and generosity.

By Gordon Hull

Large Language Models (LLMs) like ChatGPT burst into public consciousness sometime in the second half of last year, and ChatGPT’s impressive results have led to a wave of concern about the future viability of any profession that depends on writing, or on teaching writing in education. A lot of this is hype, but one issue that is emerging is the role of AI authorship in academic and other publications; there’s already a handful of submissions that list AI co-authors. An editorial in Nature published on Feb. 3 outlines the scope of the issues at hand:

“This technology has far-reaching consequences for science and society. Researchers and others have already used ChatGPT and other large language models to write essays and talks, summarize literature, draft and improve papers, as well as identify research gaps and write computer code, including statistical analyses. Soon this technology will evolve to the point that it can design experiments, write and complete manuscripts, conduct peer review and support editorial decisions to accept or reject manuscripts”

As a result:

“Conversational AI is likely to revolutionize research practices and publishing, creating both opportunities and concerns. It might accelerate the innovation process, shorten time-to-publication and, by helping people to write fluently, make science more equitable and increase the diversity of scientific perspectives. However, it could also degrade the quality and transparency of research and fundamentally alter our autonomy as human researchers. ChatGPT and other LLMs produce text that is convincing, but often wrong, so their use can distort scientific facts and spread misinformation.”

The editorial then gives examples of LLM-based problems with incomplete results, bad generalizations, inaccurate summaries, and other easily-generated problems. It emphasizes accountability (for the content of material: the use of AI should be clearly documented) and the need for the development of truly open AI products as part of a push toward transparency.

There are lots of examples of LLMs misbehaving, but here’s one from Jeremy Faust of MedPage Today that should be alarming about the danger of trusting AI-based research. Faust asked OpenAI to diagnose a patient that he described (using medical jargon) as “age 35, female, no past medical history, presents with chest pain which is pleuritic -- worse with breathing -- and she takes oral contraception pills. What's the most likely diagnosis?” The AI did really well with the diagnosis, but it also reported that the condition was “exacerbated by the use of oral contraceptive pills.” Faust had never heard this before, so he asked the AI for its source. Things went rapidly downhill:

“OpenAI came up with this study in the European Journal of Internal Medicine that was supposedly saying that. I went on Google and I couldn't find it. I went on PubMed and I couldn't find it. I asked OpenAI to give me a reference for that, and it spits out what looks like a reference. I look up that, and it's made up. That's not a real paper. It took a real journal, the European Journal of Internal Medicine. It took the last names and first names, I think, of authors who have published in said journal. And it confabulated out of thin air a study that would apparently support this viewpoint.”

So much for the ChatGPT lit review.

Journal editors have been trying to get ahead of matters. Eric Topol has been following these developments, of which I’ll extract a bit here. Nature – and the other Springer Nature journals – just established the following policy:

“First, no LLM tool will be accepted as a credited author on a research paper. That is because any attribution of authorship carries with it accountability for the work, and AI tools cannot take such responsibility. Second, researchers using LLM tools should document this use in the methods or acknowledgements sections. If a paper does not include these sections, the introduction or another appropriate section can be used to document the use of the LLM”

The editorial announcing this policy also speaks in terms of the credibility of the scientific enterprise: “researchers should ask themselves how the transparency and trust-worthiness that the process of generating knowledge relies on can be maintained if they or their colleagues use software that works in a fundamentally opaque manner.” Science has updated its policies “to specify that text generated by ChatGPT (or any other AI tools) cannot be used in the work, nor can figures, images, or graphics be the products of such tools. And an AI program cannot be an author. A violation of these policies will constitute scientific misconduct no different from altered images or plagiarism of existing works.” The JAMA network journals have similarly added to their “author responsibilities” that “Nonhuman artificial intelligence, language models, machine learning, or similar technologies do not qualify for authorship” and that:

“If these models or tools are used to create content or assist with writing or manuscript preparation, authors must take responsibility for the integrity of the content generated by these tools. Authors should report the use of artificial intelligence, language models, machine learning, or similar technologies to create content or assist with writing or editing of manuscripts in the Acknowledgment section or the Methods section if this is part of formal research design or methods”

One hesitates to speak of a “consensus” at this point, but these are three of the most influential journals in science and medicine, so their shared position is at least going to be very influential.

There is also now a call for a more nuanced conversation; in a new white paper, Ryan Jenkins and Patrick Lin argue that Nature’s policy is “hasty.” On the one hand, reducing AI to the acknowledgments page could understate and obscure its role in a paper, undermining the original goal of transparency. On the other hand, the accountability argument strikes them as too unsubtle. “For instance, authors are sometimes posthumously credited, even though they cannot presently be held accountable for what they said when alive, nor can they approve of a posthumous submission of a manuscript; yet it would clearly be hasty to forbid the submission or publication of posthumous works.” They thus argue for assessing matters on a continuum with two axes: continuity (“How substantially are the contributions of AI writers carried through to the final product?”) and creditworthiness (“Is this the kind of product a human author would normally receive credit for?”) (9).

Some of these concerns will be recognizable from pre-AI authorship debates. For example, it is difficult to know when someone’s contribution to a paper is sufficient to warrant crediting them as an author, particularly for scientific work that draws on a lot of different anterior processes, subparts, and so forth. Authorship rules have also tended to discount the labor of those whose material support has been vital to the outcome (such as childcare workers) or whose involvement and creative contribution have been essential in the development of the project (this is a particular concern when researchers go and study disadvantaged communities). In both cases, institutional practices seem complicit in perpetuating unjustified (and inaccurate) understandings of how knowledge is produced. Max Liboiron’s papers try to redress this with much longer author lists than one would ordinarily expect, as part of a broader effort to decolonize scientific practices.

I mention Liboiron because, at the risk of sounding old-fashioned, something that seems important to me is that the people they include are, well, people, and in particular people facing structural injustice. Lin’s concern with posthumous authorship isn’t about living people, but it is about personhood more broadly. Of course I am not going to argue that metaphysical personhood is either interesting or relevant in this context. What I am going to argue is that there is an issue of legal personhood that underlies the question of accountability and authorship. In other words, whether AI should be an author is basically the question of whether it makes sense to assign personhood to it, at least in this context. It seems to me that this is what should drive questions about AI authorship, and not either metaphysical questions about whether AI “is” an author, or questions about the extent to which its output resembles that of a person.

In Foucauldian terms, “author” is a political category, and we have historically used it precisely to negotiate accountability for creation. As Foucault writes in his “What Is an Author?” essay, authorship is a historically specific function, and “texts, books, and discourses really begin to have authors … to the extent that authors became subject to punishment, that is, to the extent that discourse could be transgressive” (Reader, 108). In other words, it’s about accountability and individuation: “The coming into being of the notion of ‘author’ constitutes the privileged moment of individualization in the history of ideas, knowledge, literature, philosophy, and the sciences” (101). We see this part of the author function at work in intellectual property, where the “author” is also the person who can get paid for the work (there’s litigation brewing in the IP world about AI authorship and invention). As works-for-hire doctrine indicates, the person who actually produces the work may never be the author: if I write code for Microsoft for a living, I am probably not the author of the code I write. Microsoft is.

Given that “author” names a political and juridical function, it seems to me that there are three reasons to hesitate about assigning the term “author” to an AI, which I’ll start on next time.